#SQL Pivot


Text

Pivot Table 101

Creating a pivot table in Excel allows you to summarize and analyze large datasets in a concise format. Here’s a step-by-step guide on how to create a pivot table:

1. Prepare Your Data

Ensure your data is organized: Your data should be in a table format, with each column having a header/name at the top.

Avoid blank rows and columns: This ensures the pivot table includes all your data…


0 notes

Text

3 Benefits of an AI Code Assistant

Artificial intelligence (AI) has revolutionized many industries in recent years. In the world of software development, it's helping professionals work faster and more accurately than ever before. AI-powered code assistants help developers write and review code.

The technology is versatile, generating code based on detailed codebase analysis. It can also detect errors, spot corruption and more. There are many benefits to using a code assistant. Here are some of the biggest.

More Productivity

What software professional doesn't wish they could work faster and more efficiently? With an AI code assistant, you can. These assistants can streamline your workflow in many ways.

One is by offering intelligent suggestions to generate new code for you. AI assistants do this by analyzing your codebase and learning its structure, syntax and semantics. From there, they can generate new code that complements and enhances your work.

Save Time

AI assistants can also automate the more repetitive side of software development, allowing you to focus on other tasks. Coding often requires you to spend a great deal of time on monotonous work like compilation, formatting and writing standard boilerplate code. Instead of wasting valuable time doing those tasks, you can turn to your AI assistant.

It'll take care of the brunt of the work, allowing you to shift your focus to writing code that demands your attention.

Less Debugging

Because the code is machine-generated, it tends to be consistent, so you spend less time chasing simple mistakes caused by inexperience or human error.

But that's not all. AI assistants can also help with error detection as you work. They can spot common coding errors like syntax mistakes and type mismatches. Assistants can alert you to problems or correct them automatically, without manual intervention. When it comes time to debug, you'll save hours thanks to the assistant's work.

Many developers are also using the technology for code refactoring. The AI will identify opportunities to improve the code, boosting its readability, performance and maintainability.


0 notes

Text

Demystifying SQL Pivot and Unpivot: A Comprehensive Guide

CodingSight


In the realm of data management and analysis, SQL Pivot and Unpivot operations shine as invaluable tools that enable the transformation of complex datasets into meaningful insights. This article is a deep dive into the world of SQL Pivot and Unpivot, providing a comprehensive understanding of their functions, applications, and when to employ them.

Defining SQL Pivot

SQL Pivot is a data manipulation technique that revolves around the reorganization of rows into columns, facilitating a shift from a "long" format to a more reader-friendly "wide" format. This operation streamlines data analysis and reporting, making it indispensable in numerous scenarios.

The Mechanism Behind SQL Pivot

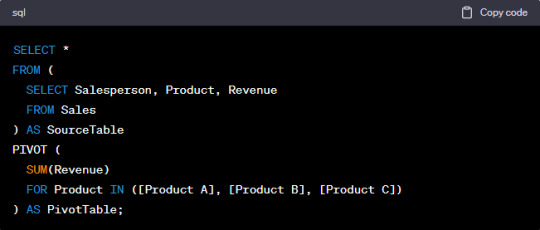

To execute a Pivot operation, you must specify the column whose distinct values should become new columns in the output, along with the column whose values will fill them. Typically, an aggregate function is employed to compute the value for each new column.

For example, sales data can be pivoted to display total revenue for each product and salesperson, with the SUM function serving to aggregate the revenue values.
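A minimal sketch of such a pivot, assuming a hypothetical `sales` table with `salesperson`, `product`, and `revenue` columns (the table, names, and figures are illustrative, not taken from the article). It runs the SQL through Python's built-in `sqlite3` module. SQLite has no `PIVOT` operator, so the query uses conditional aggregation with `SUM(CASE ...)`, a portable equivalent rather than SQL Server's `PIVOT` syntax.

```python
import sqlite3

# Hypothetical long-format sales data: one row per sale.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (salesperson TEXT, product TEXT, revenue INTEGER)"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("Alice", "Widget", 100),
        ("Alice", "Gadget", 150),
        ("Alice", "Widget", 50),
        ("Bob", "Widget", 200),
        ("Bob", "Gadget", 75),
    ],
)

# Pivot via conditional aggregation: each product value becomes its own
# column, and SUM totals the revenue that falls into that column.
pivot_sql = """
SELECT salesperson,
       SUM(CASE WHEN product = 'Widget' THEN revenue ELSE 0 END) AS widget_revenue,
       SUM(CASE WHEN product = 'Gadget' THEN revenue ELSE 0 END) AS gadget_revenue
FROM sales
GROUP BY salesperson
ORDER BY salesperson
"""
pivot_rows = conn.execute(pivot_sql).fetchall()

for row in pivot_rows:
    print(row)
# ('Alice', 150, 150)
# ('Bob', 200, 75)
```

Each distinct product needs its own `CASE` expression, so the list of output columns has to be known when the query is written.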

When to Employ SQL Pivot

SQL Pivot is the ideal choice when you need to transform data from a long format into a wide format for enhanced reporting or analysis. Common use cases include:

Generating sales and revenue reports, as exemplified above.

Creating cross-tabulated reports for survey data.

Converting timestamped data into time series data for trend analysis.

Understanding SQL Unpivot

Exploring SQL Unpivot

SQL Unpivot operates as the antithesis of Pivot. It reverses the process by transforming wide-format data back into a long format, which is more conducive to specific analytical tasks. Unpivot is used to normalize data for further processing or to simplify the integration of data from diverse sources.

The Mechanics of SQL Unpivot

Unpivot works by selecting a set of columns to convert into rows. You must also name two output columns: one that will store the values from the chosen columns, and one that will hold the names of the columns those values came from.

For example, unpivoting a wide revenue report returns the data to a long format: the former product column names become values in a "Product" column, one per row, while a "Revenue" column houses the corresponding values.
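A minimal sketch of an unpivot, assuming a hypothetical wide `sales_wide` table with one revenue column per product (names and figures are illustrative). It uses Python's built-in `sqlite3` module, and because SQLite has no `UNPIVOT` operator, the query stacks one `SELECT` per source column with `UNION ALL`, which is a stand-in for the dedicated syntax some databases offer.

```python
import sqlite3

# Hypothetical wide-format report: one revenue column per product.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales_wide (salesperson TEXT, widget INTEGER, gadget INTEGER)"
)
conn.executemany(
    "INSERT INTO sales_wide VALUES (?, ?, ?)",
    [("Alice", 150, 150), ("Bob", 200, 75)],
)

# Unpivot via UNION ALL: each source column contributes one row per
# salesperson, labelled with the column it came from.
unpivot_sql = """
SELECT salesperson, 'Widget' AS product, widget AS revenue FROM sales_wide
UNION ALL
SELECT salesperson, 'Gadget' AS product, gadget AS revenue FROM sales_wide
ORDER BY salesperson, product
"""
long_rows = conn.execute(unpivot_sql).fetchall()

for row in long_rows:
    print(row)
# ('Alice', 'Gadget', 150)
# ('Alice', 'Widget', 150)
# ('Bob', 'Gadget', 75)
# ('Bob', 'Widget', 200)
```

Note that unpivoting a summarized table yields one row per salesperson and product, not the individual sales that were aggregated away.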

When to Utilize SQL Unpivot

SQL Unpivot is indispensable when you need to normalize data or amalgamate information from multiple sources with varying structures. Common use cases include:

Analyzing survey data collected in a cross-tabulated format.

Merging data from different departments or databases characterized by distinct column structures.

Preparing data for machine learning algorithms that require specific input formats.

Conclusion

SQL Pivot and Unpivot operations emerge as indispensable assets in the realm of data manipulation and analysis. Pivot facilitates the transformation of data for improved reporting and analysis, while Unpivot streamlines data normalization and the integration of disparate information sources. Mastery of these techniques empowers data analysts, business intelligence professionals, and data scientists to unlock the full potential of their data, thereby enabling better decision-making and the revelation of hidden insights. Whether you're a seasoned data professional or just embarking on your data journey, SQL Pivot and Unpivot operations are vital tools to have at your disposal, ready to reveal the true potential of your data. Start exploring their capabilities today and embark on a data-driven journey of discovery and transformation.

0 notes

Text

Unlocking the Full Power of Apache Spark 3.4 for Databricks Runtime!

You’ve dabbled in the magic of Apache Spark 3.4 with my previous blog “Exploring Apache Spark 3.4 Features for Databricks Runtime”, where we journeyed through 8 game-changing features, from the revolutionary Spark Connect to the nifty tricks of constructing parameterized SQL queries. But guess what? We’ve only scratched the surface! In this sequel, we’re diving deeper into the treasure trove of…


#Apache Spark#Azure Databricks#Azure Databricks Cluster#Data Frame#Databricks#databricks apache spark#Databricks SQL#Memory Profiler#NumPy#performance#Pivot#pyspark#PySpark UDFs#SQL#SQL queries#SQL SELECT#SQL Server

0 notes

Text

If tumblr's going to go full on into imitating other social networks we really need the ability to multiple-like posts even if it's only aesthetic. Blog A and B both reblog the same post on the same day. I can only like it once, because every instance of that same post will appear as liked on my dash. Either give both A and B the 'person liked this post!' notification from me liking either of them, or let me like both of them individually (while only adding +1 to the liked post, hence the 'only aesthetic' bit) so they both get it anyways. This would effectively cut off the dumb race-to-be-first stuff that shows up so often on other networks. Everyone gets their dopamine, nobody loses, and since the extra likes are just aesthetic people can't bot it to farm likes.

@staff please we're begging you, this is the only good social media left, give us this stuff to make it better instead of flattening reblogs and effectively wiping out the site's history.

Before Tumblr gets more into algorithmic content, the "For you" tab needs two buttons under each post:

1. Why the fuck did you think I'd like this?

2. Never even think of showing me this shit ever again

#or hire me i'll do it even if it involves dealing with pivot tables or nonsensical sql hell#REALLY don't want this place to die

2K notes

Text

on wanting to do a million things

prompted by @bloodshack 's

i wanna learn SQL but i wanna learn haskell but i wanna learn statistics but i wanna start a degree in macroeconomics also sociology also library science but i wanna learn norwegian but i wanna learn mandarin but i wanna paint but i wanna do pottery but i wanna get better at woodworking but i wanna get better at cooking but i wanna bake one of those cakes that's just 11 crepes stacked on top of each other but i wanna watch more movies but i wanna listen to more podcast episodes but i need to rest but i need to exercise but i wanna play with my dog but i wanna go shopping but i need to go grocery shopping but i need to do the dishes but i need to do laundry but i need to buy a new x y and z but i need to save money but i wanna give all my money away to people who need it more but i wanna pivot my career to book editing but to do that i have to read more and i wanna read more nonfiction but i wanna read more novels but i wanna get better at meditating but i wanna volunteer but i wanna plan a party but i wanna go to law school. but what im gonna do is watch a dumbass youtube video and go to bed

I think I've been doing slightly better this year about Actually Doing Things. not great! but I do a lot and I've been "prototyping" ways to get closer to doing as much as is possible. and if I actually talk about it it's a bunch of very obvious statements but I'll try to make them a little more concrete

rule number one: experiment on yourself

there's no one approach that's right for everyone and there's not even one approach for me that works at all times. try things out. see what works. pay attention to what doesn't. try something else.

rule number two: ask what's stopping you and then take it seriously

example: I often want to do Everything in the evening at like 2 PM, but then get home and am tempted sorely by the couch, and then get stuck inertia'd and not doing much but being tired and kind of bored. why?

if I don't have plans, it's easy to leave work later than planned and hard to make myself do something by a specific time

i'm generally tiredish after work. 4 out of 5 times, that'll go away if I actually start Doing Something, but 1 out of 5 it's real and I will go hardcore sleepmode at 8 PM and just be Done

i use up a ton of my program management/executive function/Deciding Things brain at work and usually find it noticeably harder to string together "want to do Thing > make list of Things > decide on a Thing > do Thing" after I'm home. Even if I have a list of Things to Do, how does one decide! how does one start! and god forbid there's a Necessary thing. then it's all downhill

therefore, mitigations: have concrete time-specific plans in advance.

if I have an art class at 6:00 PM I need to leave work by 5:15 and NO LATER and I can't get sucked into "oh 10 more minutes to finish this" *one hour later*

that also means I have to have a fridge or freezer dinner ready and can't spend 45 minutes cooking "fuck it, what the hell did I put in the fridge, why don't we have soy sauce" evil meal that is not good

plans with friends: dinner! art night! music night! repair-your-clothes night! seeing a show! occasionally, Accountability Time where a friend comes over for We Are Doing Tasks with tea and snacks etc.

for some reason I'm way better about Actually Doing Things when the plan exists already. magically I overcome couch inertia even though I am the same amount of tired! and while I never learn the ability to decouch without plans I at least learn to make them

still working on:

a "prototype" for maybe next month is a weeklyish Study Session for a thing I want to learn about. I want to somehow make it employer-proof (I am accountable to some entity to being at place X at time Y) and haven't figured out a good way. Maybe I can leverage that the local library is open til 8 on wednesdays and somehow make it a Thing? maybe I'll try it!

oh god oh fuck the thing about plans is that if you want to have them you need to make them. christ. a lot of the time I can cover this with some combo of weekend planning + recurring events (things like weekly friend dinner/weekly class) + having cool friends who reach out proactively but it still requires active planning and it can fall thru the cracks

rule three: cool friends

they can take you to things

they can remind you that you can do whatever the fuck you please

i have a friend who is somehow Always doing cool classes and learning shit. and this reminds me that I can ... do that. and sometimes I do

you can take them to things!!

rule four: try to kill the anon hate in your head

obv this depends on your circumstance but sometimes it's worth it to me to look at constraints that "feel real" and check whether they're an active choice I made thoughtfully or, like, the specters of people I don't know judging my choices

time and money are obvious ones. recently was gently nudged towards looking at whether i could give myself more time to Do Things by cooking less. imaginary specters of judgmental twitterites: "it's illegal to spend money. if you get takeout you're the first up against the wall when the revolution comes. make all your lunches and dinners and hoard the money for Later. for Something. how dare you get lunch at the store. you bourgeois hoe. taking charity donations from the mouths of the poor cause you don't have your life together enough to cook artisanal bespoke dinners every night. fuck you." and obviously eating takeout 24/7 is not the answer, but realizing I was not making an active choice helped me try making the active choice instead. "how much do I actually want to balance cost, time, tastiness, and wastefulness of my food, given my amount of free time and my salary and the tradeoff against doing something else? can I approach it differently to do more quick cheap food + some takeout?" -> current prototype: substitute in 1 takeout dinner or restaurant-with-friends a week, 1 frozen type dinner, and then batch cook or sandwiches lunches w/ "permission" to get fast lunch at the store. we'll see how it goes!

i am really really bad at this and find it helpful to talk to other people who can help point out when I'm being haunted by ghosts about it.

rule five: what would it take? what's the next step?

this one i give a lot of credit to @adiantum-sporophyte in particular for, especially for prompting me with questions when I muse about the million-ideal-lives on car rides. what would it look like to do xyz? what's something I could do right now to move in that direction? what's the obstacle? like, actually ask the question and think through it. with a person talking to you! damn! maybe the obstacle to x is that I don't know if I'll like it or if I just like the idea of it. and I don't want to commit to x without knowing. Okay, so maybe an approach would be to find someone who does x and talk to them about how their life is, or maybe it's "spend 15 minutes looking up intro-to-x near me", or "actively schedule 1 instance of x", or something like that. Or maybe it's that I don't know what it takes to do x. Okay, how about on Tues after dinner Adiantum fixes a sweater at my apartment while I spend 20 min looking at prereqs for x. like, it's so basic to say "to do a thing, you could try figuring out how to do it" but I think the important thing here is the feedback/prompting to even recognize "hey, step back, if you don't know the next step then figuring out the next step is the next step"

rule six: habits

prototyping: exercise

I do a lot better when I exercise in the mornings. I do a lot better when I do PT exercises regularly. For a while I was doing PT with friend in the morning every morning before work (accountability! a friendly face to make it more pleasant!) but that didn't really solve - it's not the kind of exercise that makes me feel awake/active, it's like dumb little foot botherings. but: having the habit of morning exercise made it easier to swap out 2 of the 5 days for more intense exercise, and then to swap those 2 for a different more intense exercise when I needed a break. it's easier to build a low-effort version of the habit and then work in the higher-effort one than to just Decide to be the kind of person who gets up at ass o clock to do cardio or whatever

rule seven: set up the structure of your life to make it easy

this is also a "duh" thing but like. on so many levels it comes down to structure your life to make the choice more doable. this can be something like "i structure my life to make vegetarian cooking baseline and vegan cooking the majority by stocking the pantry with staples and spices from cuisines that work well that way" or "i chose an apartment that lets me commute by bike" or "i have my camping gear put away in a fashion that makes it easier to gather frequently and lowers the barrier to trips" or "i keep physical books around to prompt myself to read xyz" to "i don't use instagram or twitter or snapchat or facebook" to . idk.

and in terms of charitable giving: similar deal. I have an explicit budget at the beginning of the year (~10% of my before-tax income), I know in advance what charities I give to, and I know what timing I will use (basically, alerts for donation matching around specific fundraising times). Anything outside the Plan comes from my discretionary budget/fun money. That makes it less of a mental load (the choice is already made; I don't grapple with every donation request or every bleeding-heart trap because I have a very solid anchor on "I give to xyz, the money's set aside") and it's armor against impulsive-but-not-useful scrupulosity. I structure the rest of my spending/life to prioritize a set amount and it makes it easier to follow through

rule eight: if you can do it at work a tiny bit that counts for real life

(infrequently used)

"hi mr. manager I think it would be great if I could use enough SQL to make basic queries in the database so we don't have to go through the software team for common/basic questions. I'd like to take 1 hr on Friday to go through some basic tutorials and then 1 hr with Pat on Monday so he can walk me through an intro for our specific use case. I estimate this will help save the team a couple hours a week of waiting for answers from the other team." and then you have enough of a handle with baby's first SQL that you can add little bits and bobs as you exercise it. this is responsible for a medium amount of my knowledge of python and all 3 brain cells worth of SQL.

rule nine: life is an optimization problem

not in, like, "you need to optimize your skincare and career and exercise and social life and have everything all at once" that's not what optimization means. optimization is like, maximize something with respect to a set of constraints. i explicitly Do Not do skincare beyond "wash face" and "sunscreen" bc I want to optimize my life for like looking at weird plants in the mountains. explicitly choosing to put time and money elsewhere! can't have it all all at once. so fuck them pores. who give a shit. yeah i ate a lot of protein shakes instead of home cooked breakfasts this week bc i was prioritizing morning exercise. im looking at this beautiful bug and it doesn't know what fashion is or what my resume looks like. im holding a lizard. im not spending time on picking cool clothes or whatever bc i spent that time looking up lizard hotspots on purpose.

that's really long and probably mostly, like, not surprising? but i keep benefiting from ppl being like "hey have you considered Obvious Thing" framed very gently

99 notes

Text

Future of LLMs (or, "AI", as it is improperly called)

Posted a thread on bluesky and wanted to share it and expand on it here. I'm tangentially connected to the industry as someone who has worked in game dev, but I know people who work at more enterprise focused companies like Microsoft, Oracle, etc. I'm a developer who is highly AI-critical, but I'm also aware of where it stands in the tech world and thus I think I can share my perspective. I am by no means an expert, mind you, so take it all with a grain of salt, but I think that since so many creatives and artists are on this platform, it would be of interest here. Or maybe I'm just rambling, idk.

LLM art models ("AI art") will eventually crash and burn. Even if they win their legal battles (which if they do win, it will only be at great cost), AI art is a bad word almost universally. Even more than that, the business model hemorrhages money. Every time someone generates art, the company loses money -- it's a very high energy process, and there's simply no way to monetize it without charging like a thousand dollars per generation. It's environmentally awful, but it's also expensive, and the sheer cost will mean they won't last without somehow bringing energy costs down. Maybe this could be doable if they weren't also being sued from every angle, but they just don't have infinite money.

Companies that are investing in "ai research" to find a use for LLMs in their company will, after years of research, come up with nothing. They will blame their devs and lay them off. The devs, worth noting, aren't necessarily to blame. I know an AI developer at meta (LLM, really, because again AI is not real), and the morale of that team is at an all time low. Their entire job is explaining patiently to product managers that no, what you're asking for isn't possible, nothing you want me to make can exist, we do not need to pivot to LLMs. The product managers tell them to try anyway. They write an LLM. It is unable to do what was asked for. "Hm let's try again" the product manager says. This cannot go on forever, not even for Meta. Worst part is, the dev who was more or less trying to fight against this will get the blame, while the product manager moves on to the next thing. Think like how NFTs suddenly disappeared, but then every company moved to AI. It will be annoying and people will lose jobs, but not the people responsible.

ChatGPT will probably go away as something public facing as the OpenAI foundation continues to be mismanaged. However, while ChatGPT as something people use to like, write scripts and stuff, will become less frequent as the public facing chatGPT becomes unmaintainable, internal chatGPT based LLMs will continue to exist.

This is the only sort of LLM that actually has any real practical use case. Basically, companies like Oracle, Microsoft, Meta etc license an AI company's model, usually ChatGPT. They are given more or less a version of ChatGPT they can then customize and train on their own internal data. These internal LLMs are then used by developers and others to assist with work. Not in the "write this for me" kind of way but in the "Find me this data" kind of way, or asking it how a piece of code works. "How does X software that Oracle makes do Y function, take me to that function" and things like that. Also asking it to write SQL queries and RegExes. Everyone I talk to who uses these internal LLMs talks about how that's like, the biggest thing they ask it to do, lol.

This still has some ethical problems. It's bad for the environment, but it's not being done in some datacenter in god knows where and vampiring off of a power grid -- it's running on the existing servers of these companies. Their power costs will go up, contributing to global warming, but it's profitable and actually useful, so companies won't care and only do token things like carbon credits or whatever. Still, it will be less of an impact than now, so there's something. As for training on internal data, I personally don't find this unethical, not in the same way as training off of external data. Training a language model to understand a C++ project and then asking it for help with that project is not quite the same thing as asking a bot that has scanned all of GitHub against the consent of developers and asking it to write an entire project for me, you know? It will still sometimes hallucinate and give bad results, but nowhere near as badly as the massive, public bots do since it's so specialized.

The only one I'm actually unsure and worried about is voice acting models, aka AI voices. It gets far less pushback than AI art (it should get more, but it's not as caustic to a brand as AI art is. I have seen people willing to overlook an AI voice in a youtube video, but will have negative feelings on AI art), as the public is less educated on voice acting as a profession. This has all the same ethical problems that AI art has, but I do not know if it has the same legal problems. It seems legally unclear who owns a voice when they voice act for a company; obviously, if a third party trains on your voice from a product you worked on, that company can sue them, but can you directly? If you own the work, then yes, you definitely can, but if you did a role for Disney and Disney then trains off of that... this is morally horrible, but legally, without stricter laws and contracts, they can get away with it.

In short, AI art does not make money outside of venture capital so it will not last forever. ChatGPT's main income source is selling specialized LLMs to companies, so the public facing ChatGPT is mostly like, a showcase product. As OpenAI the company continues to deathspiral, I see the company shutting down, and new companies (with some of the same people) popping up and pivoting to exclusively catering to enterprises as an enterprise solution. LLM models will become like, idk, SQL servers or whatever. Something the general public doesn't interact with directly but is everywhere in the industry. This will still have environmental implications, but LLMs are actually good at this, and the data theft problem disappears in most cases.

Again, this is just my general feeling, based on things I've heard from people in enterprise software or working on LLMs (often not because they signed up for it, but because the company is pivoting to it so i guess I write shitty LLMs now). I think artists will eventually be safe from AI but only after immense damages, I think writers will be similarly safe, but I'm worried for voice acting.

8 notes

Text

Long Time No See!

Sorry I've been radio silent for so long! I've been super busy. I don't even remember when my last post was.

Anyway, more under the cut

I haven't really got much time to code. I started my new job back in January and it's taken up a lot of my time. I've also had to adjust to working again and balancing both my home/work life. Including my own happiness and yada yada. Which has led to not many streams and other things too.

As for coding, I pivoted to Javascript and SQL, but I've had to shelve that too. So I'm not sure I'll be keeping this as a coding blog. We'll see.

As to why? It's mostly for work reasons. I've been trying to figure out what I need to focus on, and to stay at my current company and climb the ladder, it's better for me to pivot over to IT Certifications. I really like this company and I'd like to stay, so coding will probably be on the back burner.

There's a lot of stuff I want to do, and trying to find time for it all is really hard. You gotta figure out where to sacrifice stuff, and that's been a challenge. Especially if I want to be happy and sane.

This may just become a blog blog, or a tech blog, but we'll see.

Anyway, that's all I got for now. I'm studying for the A+ cert and then making my way into the Network Cert or Security. I haven't decided. I hope you all are staying wonderful. +2 to your next Charisma check!

4 notes

Text

using a sql or excel mindset overcomplicates things soooo much in power bi. i keep finding myself trying to do all kinds of setup to prep data so it'll cooperate with how I want to use it and then I realize i just gotta drag one value onto another to connect em or something else easy

i have somewhat of a tough time pivoting between programs. it reminds me of how i can only really remember how to knit OR how to crochet. just one at a time, not both. when I switch from one to the other I gotta hunker down and really focus to remember the basics again lol

#dealing with dates was an actual nightmare before my new boss told me to use date tables though#power bi manages dates a thousand times better than excel but without knowing about using date tables... hoo boy#was kinda fucked

3 notes

Note

As a big data nerd myself, I *loved* seeing your fic reading stats!! Do you use software to track or some kind of spreadsheet?

Thank you! I have a google sheet I enter every fic I read into, and separate tabs in that sheet that show both data overall and data per month (stuff like longest/shortest fic, top authors and tags that month etc). That gives me most of the sorting and visualizing I need, since you can do some SQL querying in google sheets as well! For the pivot chart and histogram I had to turn to excel, though, as they required more customization options than google sheets has! I started tracking my fic reading early fall 2022, so by now I have a couple of years of data! :)

#ask frida#sharpbutsoft#i originally got the template from maria but i've modified it pretty heavily since then

2 notes

Text

SQL Server Pivot is a transformative tool that offers a dynamic approach to reshaping and restructuring data in the ever-evolving field of database management. Let's Explore Deeply:

https://madesimplemssql.com/sql-server-pivot/

2 notes

Text

The Skills I Acquired on My Path to Becoming a Data Scientist

Data science has emerged as one of the most sought-after fields in recent years, and my journey into this exciting discipline has been nothing short of transformative. As someone with a deep curiosity for extracting insights from data, I was naturally drawn to the world of data science. In this blog post, I will share the skills I acquired on my path to becoming a data scientist, highlighting the importance of a diverse skill set in this field.

The Foundation — Mathematics and Statistics

At the core of data science lies a strong foundation in mathematics and statistics. Concepts such as probability, linear algebra, and statistical inference form the building blocks of data analysis and modeling. Understanding these principles is crucial for making informed decisions and drawing meaningful conclusions from data. Throughout my learning journey, I immersed myself in these mathematical concepts, applying them to real-world problems and honing my analytical skills.

Programming Proficiency

Proficiency in programming languages like Python or R is indispensable for a data scientist. These languages provide the tools and frameworks necessary for data manipulation, analysis, and modeling. I embarked on a journey to learn these languages, starting with the basics and gradually advancing to more complex concepts. Writing efficient and elegant code became second nature to me, enabling me to tackle large datasets and build sophisticated models.

Data Handling and Preprocessing

Working with real-world data is often messy and requires careful handling and preprocessing. This involves techniques such as data cleaning, transformation, and feature engineering. I gained valuable experience in navigating the intricacies of data preprocessing, learning how to deal with missing values, outliers, and inconsistent data formats. These skills allowed me to extract valuable insights from raw data and lay the groundwork for subsequent analysis.

Data Visualization and Communication

Data visualization plays a pivotal role in conveying insights to stakeholders and decision-makers. I realized the power of effective visualizations in telling compelling stories and making complex information accessible. I explored various tools and libraries, such as Matplotlib and Tableau, to create visually appealing and informative visualizations. Sharing these visualizations with others enhanced my ability to communicate data-driven insights effectively.

Machine Learning and Predictive Modeling

Machine learning is a cornerstone of data science, enabling us to build models that learn patterns from data and predict outcomes. I delved into the realm of supervised and unsupervised learning, exploring algorithms such as linear regression, decision trees, and clustering techniques. Through hands-on projects, I gained practical experience in building models, fine-tuning their parameters, and evaluating their performance.

Database Management and SQL

Data science often involves working with large datasets stored in databases. Understanding database management and SQL (Structured Query Language) is essential for extracting valuable information from these repositories. I embarked on a journey to learn SQL, mastering the art of querying databases, joining tables, and aggregating data. These skills allowed me to harness the power of databases and efficiently retrieve the data required for analysis.

Domain Knowledge and Specialization

While technical skills are crucial, domain knowledge adds a unique dimension to data science projects. By specializing in specific industries or domains, data scientists can better understand the context and nuances of the problems they are solving. I explored various domains and acquired specialized knowledge, whether it be healthcare, finance, or marketing. This expertise complemented my technical skills, enabling me to provide insights that were not only data-driven but also tailored to the specific industry.

Soft Skills — Communication and Problem-Solving

In addition to technical skills, soft skills play a vital role in the success of a data scientist. Effective communication allows us to articulate complex ideas and findings to non-technical stakeholders, bridging the gap between data science and business. Problem-solving skills help us navigate challenges and find innovative solutions in a rapidly evolving field. Throughout my journey, I honed these skills, collaborating with teams, presenting findings, and adapting my approach to different audiences.

Continuous Learning and Adaptation

Data science is a field that is constantly evolving, with new tools, technologies, and trends emerging regularly. To stay at the forefront of this ever-changing landscape, continuous learning is essential. I dedicated myself to staying updated by following industry blogs, attending conferences, and participating in courses. This commitment to lifelong learning allowed me to adapt to new challenges, acquire new skills, and remain competitive in the field.

In conclusion, the journey to becoming a data scientist is an exciting and dynamic one, requiring a diverse set of skills. From mathematics and programming to data handling and communication, each skill plays a crucial role in unlocking the potential of data. Aspiring data scientists should embrace this multidimensional nature of the field and embark on their own learning journey. By acquiring these skills and continuously adapting to new developments, they can make a meaningful impact in the world of data science. If you want to learn more about data science, I recommend contacting ACTE Technologies, which offers data science courses and job placement opportunities, both online and offline, taught by experienced instructors. Take things step by step and consider enrolling in a course if you’re interested.

#data science#data visualization#education#information#technology#machine learning#database#sql#predictive analytics#r programming#python#big data#statistics

14 notes

Text

Unlocking Data Insights with SQL Pivot and Unpivot: A Comprehensive Guide

CodingSight

In today's data-driven world, the ability to transform and analyze data efficiently is crucial for making informed decisions. SQL Pivot and Unpivot operations are powerful tools that can help you restructure and manipulate data, enabling you to extract valuable insights from complex datasets. In this article, we will delve into the world of SQL Pivot and Unpivot, explaining what they are, how they work, and when to use them.

What is SQL Pivot?

SQL Pivot is a data transformation operation that allows you to rotate rows into columns, essentially changing the data's orientation. This is particularly useful when you have data in a "long" format and need to display it in a more readable "wide" format. Pivot can simplify data analysis and reporting, making it a valuable technique in various scenarios.

How does SQL Pivot Work?

To perform a Pivot operation, you specify the column whose distinct values will become new columns in the output, along with the column whose values will populate them. Because several input rows can map to the same output cell, you typically use an aggregate function to calculate the value for each new column. Here's a simplified example:
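The example itself did not survive in this copy of the post, so what follows is only a minimal sketch of the idea, not the author's original query. It assumes a hypothetical `sales` table with `salesperson`, `product`, and `revenue` columns and made-up rows. It runs against SQLite through Python's standard `sqlite3` module, and because SQLite has no PIVOT operator, it emulates the pivot with one `SUM(CASE ...)` column per product. In SQL Server the same result would normally be written with the PIVOT operator instead.

```python
import sqlite3

# Hypothetical long-format data: one row per individual sale.
rows = [
    ("Alice", "Widget", 100.0),
    ("Alice", "Gadget", 250.0),
    ("Alice", "Widget", 50.0),
    ("Bob", "Widget", 75.0),
    ("Bob", "Gadget", 125.0),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (salesperson TEXT, product TEXT, revenue REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)

# Emulated pivot: each product value becomes its own column, and SUM
# aggregates the revenue of all rows that fall into that cell.
pivot_sql = """
SELECT salesperson,
       SUM(CASE WHEN product = 'Widget' THEN revenue ELSE 0 END) AS widget,
       SUM(CASE WHEN product = 'Gadget' THEN revenue ELSE 0 END) AS gadget
FROM sales
GROUP BY salesperson
ORDER BY salesperson
"""
pivoted = conn.execute(pivot_sql).fetchall()

for row in pivoted:
    print(row)
# ('Alice', 150.0, 250.0)
# ('Bob', 75.0, 125.0)
```

Note that the list of products is hard-coded in the query. A pivot needs to know its output columns up front, so adding a product means adding a column expression.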

In this example, we are pivoting the data to display total revenue for each product and each salesperson. The SUM function is used to aggregate the revenue values.

When to Use SQL Pivot

SQL Pivot is ideal when you need to transform data from a long format to a wide format for reporting or analysis. Common use cases include:

Sales and revenue reporting, as demonstrated above.

Creating cross-tabular reports for survey data.

Converting timestamped data into time series data for trend analysis.

Understanding SQL Unpivot

What is SQL Unpivot?

SQL Unpivot is the reverse of Pivot. It allows you to transform data from a wide format back into a long format, which can be more suitable for certain analytical tasks. Unpivot can be used to normalize data for further processing or to simplify data integration from multiple sources.

How does SQL Unpivot Work?

Unpivot works by selecting a set of columns to transform into rows. You also name two output columns: one that will hold the values from the selected columns and one that will hold their original column names. Here's a simplified example:
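The original example is missing from this copy of the post as well, so the following is a hedged sketch rather than the author's query. It assumes a hypothetical wide table `sales_wide` with one revenue column per product (`widget`, `gadget`) and made-up rows. SQLite has no UNPIVOT operator, so the sketch emulates it with one `SELECT` per source column joined by `UNION ALL`. SQL Server offers a dedicated UNPIVOT operator for the same job.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical wide-format data: one row per salesperson, one column per product.
conn.execute("CREATE TABLE sales_wide (salesperson TEXT, widget REAL, gadget REAL)")
conn.executemany(
    "INSERT INTO sales_wide VALUES (?, ?, ?)",
    [("Alice", 150.0, 250.0), ("Bob", 75.0, 125.0)],
)

# Emulated unpivot: each source column contributes one row per salesperson.
# The former column name is stored in "product", its value in "revenue".
unpivot_sql = """
SELECT salesperson, 'Widget' AS product, widget AS revenue FROM sales_wide
UNION ALL
SELECT salesperson, 'Gadget' AS product, gadget AS revenue FROM sales_wide
ORDER BY salesperson, product
"""
unpivoted = conn.execute(unpivot_sql).fetchall()

for row in unpivoted:
    print(row)
# ('Alice', 'Gadget', 250.0)
# ('Alice', 'Widget', 150.0)
# ('Bob', 'Gadget', 125.0)
# ('Bob', 'Widget', 75.0)
```

One caveat: if the wide table was produced by an aggregating pivot, unpivoting returns the aggregated totals, not the individual rows that were summed to produce them.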

In this example, we are unpivoting the data to return to a long format. The former column names become values in the "Product" column, one per row, and the "Revenue" column holds the corresponding values.

When to Use SQL Unpivot

SQL Unpivot is valuable when you need to normalize data or when you want to combine data from different sources with varying structures. Some common use cases include:

Analyzing survey data collected in a cross-tabular format.

Combining data from different departments or databases with different column structures.

Preparing data for machine learning algorithms that require a specific input format.

Conclusion

SQL Pivot and Unpivot operations are indispensable tools for data transformation and analysis. Pivot allows you to reshape data for clearer reporting and analysis, while Unpivot helps you normalize data and integrate information from diverse sources. By mastering these techniques, you can harness the full potential of your data, enabling better decision-making and insights. Whether you are a data analyst, a business intelligence professional, or a data scientist, these SQL operations will prove to be valuable assets in your toolkit. Start exploring their capabilities today, and unlock the hidden potential of your data.

0 notes

Text

The Vital Role of Windows VPS Hosting Services in Today’s Digital World

In the fast-paced, ever-evolving digital landscape, businesses and individuals alike are in constant pursuit of reliability, speed, and efficiency. One technological marvel that has been increasingly pivotal in achieving these goals is Windows VPS (Virtual Private Server) hosting services. These services offer a robust and versatile solution that caters to a wide range of needs, from small business operations to large-scale enterprises. But what makes Windows VPS hosting services so indispensable? Let's dive in.

1. Unmatched Performance and Reliability

When it comes to performance, Windows VPS hosting stands out. Unlike shared hosting, where all accounts on a server compete for the same pool of resources, VPS hosting allocates a dedicated share of CPU, memory, and storage to each virtual server. This generally means faster load times, less downtime, and a smoother user experience. For businesses, that can translate to higher customer satisfaction and improved SEO rankings.

2. Scalability at Its Best

One of the standout features of Windows VPS hosting is its scalability. Whether you're a startup experiencing rapid growth or an established business expanding its digital footprint, VPS hosting allows you to easily upgrade your resources as needed. This flexibility ensures that your hosting service grows with your business, eliminating the need for frequent and costly migrations.

3. Enhanced Security Measures

In an age where cyber threats are a constant concern, security is paramount. Windows VPS hosting provides a higher level of security compared to shared hosting. With isolated environments for each user, the risk of security breaches is significantly minimized. Additionally, many Windows VPS services come with advanced security features such as firewalls, regular backups, and DDoS protection, ensuring your data remains safe and secure.

4. Full Administrative Control

For those who require more control over their hosting environment, Windows VPS hosting offers full administrative access. This means you can customize your server settings, install preferred software, and manage your resources as you see fit. This level of control is particularly beneficial for developers and IT professionals who need a tailored hosting environment to meet specific project requirements.

5. Cost-Effective Solution

Despite its numerous advantages, Windows VPS hosting remains a cost-effective solution. It offers a middle ground between the affordability of shared hosting and the high performance of dedicated hosting. By only paying for the resources you need, you can optimize your budget without compromising on quality or performance.

6. Seamless Integration with Microsoft Products

For businesses heavily invested in the Microsoft ecosystem, Windows VPS hosting provides seamless integration with Microsoft products. Whether it's running applications like SQL Server, SharePoint, or other enterprise solutions, the compatibility and performance of Windows VPS hosting are unparalleled.

In conclusion, Windows VPS hosting services are a critical asset in the modern digital world. They offer unmatched performance, scalability, security, control, and cost-effectiveness, making them an ideal choice for businesses and individuals striving for success online. As the digital landscape continues to evolve, embracing Windows VPS hosting can provide the stability and reliability needed to stay ahead of the curve.

3 notes

Text

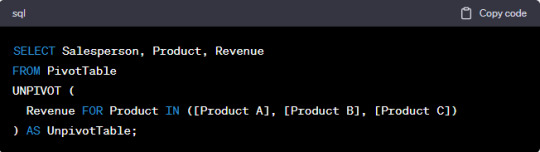

Exploring the Power of Amazon Web Services: Top AWS Services You Need to Know

In the ever-evolving realm of cloud computing, Amazon Web Services (AWS) has established itself as an undeniable force to be reckoned with. AWS's vast and diverse array of services has positioned it as a dominant player, catering to the evolving needs of businesses, startups, and individuals worldwide. Its popularity transcends boundaries, making it the preferred choice for a myriad of use cases, from startups launching their first web applications to established enterprises managing complex networks of services. This blog embarks on an exploratory journey into the boundless world of AWS, delving deep into some of its most sought-after and pivotal services.

As the digital landscape continues to expand, understanding these AWS services and their significance is pivotal, whether you're a seasoned cloud expert or someone taking the first steps in your cloud computing journey. Join us as we delve into the intricate web of AWS's top services and discover how they can shape the future of your cloud computing endeavors. From cloud novices to seasoned professionals, the AWS ecosystem holds the keys to innovation and transformation.

Amazon EC2 (Elastic Compute Cloud): The Foundation of Scalability

At the core of AWS's capabilities is Amazon EC2, the Elastic Compute Cloud. EC2 provides resizable compute capacity in the cloud, allowing you to run virtual servers, commonly referred to as instances. These instances serve as the foundation for a multitude of AWS solutions, offering the scalability and flexibility required to meet diverse application and workload demands. Whether you're a startup launching your first web application or an enterprise managing a complex network of services, EC2 ensures that you have the computational resources you need, precisely when you need them.

Amazon S3 (Simple Storage Service): Secure, Scalable, and Cost-Effective Data Storage

When it comes to storing and retrieving data, Amazon S3, the Simple Storage Service, stands as an indispensable tool in the AWS arsenal. S3 offers a scalable and highly durable object storage service that is designed for data security and cost-effectiveness. This service is the choice of businesses and individuals for storing a wide range of data, including media files, backups, and data archives. Its flexibility and reliability make it a prime choice for safeguarding your digital assets and ensuring they are readily accessible.

Amazon RDS (Relational Database Service): Streamlined Database Management

Database management can be a complex task, but AWS simplifies it with Amazon RDS, the Relational Database Service. RDS automates many common database management tasks, including patching, backups, and scaling. It supports multiple database engines, including popular options like MySQL, PostgreSQL, and SQL Server. This service allows you to focus on your application while AWS handles the underlying database infrastructure. Whether you're building a content management system, an e-commerce platform, or a mobile app, RDS streamlines your database operations.

AWS Lambda: The Era of Serverless Computing

Serverless computing has transformed the way applications are built and deployed, and AWS Lambda is at the forefront of this revolution. Lambda is a serverless compute service that enables you to run code without the need for server provisioning or management. It's the perfect solution for building serverless applications, microservices, and automating tasks. The unique pricing model ensures that you pay only for the compute time your code actually uses. This service empowers developers to focus on coding, knowing that AWS will handle the operational complexities behind the scenes.

Amazon DynamoDB: Low Latency, High Scalability NoSQL Database

Amazon DynamoDB is a managed NoSQL database service that stands out for its low latency and exceptional scalability. It's a popular choice for applications with variable workloads, such as gaming platforms, IoT solutions, and real-time data processing systems. DynamoDB automatically scales to meet the demands of your applications, ensuring consistent, single-digit millisecond latency at any scale. Whether you're managing user profiles, session data, or real-time analytics, DynamoDB is designed to meet your performance needs.

Amazon VPC (Virtual Private Cloud): Tailored Networking for Security and Control

Security and control over your cloud resources are paramount, and Amazon VPC (Virtual Private Cloud) empowers you to create isolated networks within the AWS cloud. This isolation enhances security and control, allowing you to define your network topology, configure routing, and manage access. VPC is the go-to solution for businesses and individuals who require a network environment that mirrors the security and control of traditional on-premises data centers.

Amazon SNS (Simple Notification Service): Seamless Communication Across Channels

Effective communication is a cornerstone of modern applications, and Amazon SNS (Simple Notification Service) is designed to facilitate seamless communication across various channels. This fully managed messaging service enables you to send notifications to a distributed set of recipients, whether through email, SMS, or mobile devices. SNS is an essential component of applications that require real-time updates and notifications to keep users informed and engaged.

Amazon SQS (Simple Queue Service): Decoupling for Scalable Applications

Decoupling components of a cloud application is crucial for scalability, and Amazon SQS (Simple Queue Service) is a fully managed message queuing service designed for this purpose. It ensures reliable and scalable communication between different parts of your application, helping you create systems that can handle varying workloads efficiently. SQS is a valuable tool for building robust, distributed applications that can adapt to changes in demand.

In the rapidly evolving landscape of cloud computing, Amazon Web Services (AWS) stands as a colossus, offering a diverse array of services that address the ever-evolving needs of businesses, startups, and individuals alike. AWS's popularity transcends industry boundaries, making it the go-to choice for a wide range of use cases, from startups launching their inaugural web applications to established enterprises managing intricate networks of services.

To unlock the full potential of these AWS services, gaining comprehensive knowledge and hands-on experience is key. ACTE Technologies, a renowned training provider, offers specialized AWS training programs designed to provide practical skills and in-depth understanding. These programs equip you with the tools needed to navigate and excel in the dynamic world of cloud computing.

With AWS services at your disposal, the possibilities are endless, and innovation knows no bounds. Join the ever-growing community of cloud professionals and enthusiasts, and empower yourself to shape the future of the digital landscape. ACTE Technologies is your trusted guide on this journey, providing the knowledge and support needed to thrive in the world of AWS and cloud computing.

8 notes

Text

Navigating the Cloud Landscape: Unleashing Amazon Web Services (AWS) Potential

In the ever-evolving tech landscape, businesses are in a constant quest for innovation, scalability, and operational optimization. Enter Amazon Web Services (AWS), a robust cloud computing juggernaut offering a versatile suite of services tailored to diverse business requirements. This blog explores the myriad applications of AWS across various sectors, providing a transformative journey through the cloud.

Harnessing Computational Agility with Amazon EC2

Central to the AWS ecosystem is Amazon EC2 (Elastic Compute Cloud), a pivotal player reshaping the cloud computing paradigm. Offering scalable virtual servers, EC2 empowers users to seamlessly run applications and manage computing resources. This adaptability enables businesses to dynamically adjust computational capacity, ensuring optimal performance and cost-effectiveness.

Redefining Storage Solutions

AWS addresses the critical need for scalable and secure storage through services such as Amazon S3 (Simple Storage Service) and Amazon EBS (Elastic Block Store). S3 acts as a dependable object storage solution for data backup, archiving, and content distribution. Meanwhile, EBS provides persistent block-level storage designed for EC2 instances, guaranteeing data integrity and accessibility.

Streamlined Database Management: Amazon RDS and DynamoDB

Database management undergoes a transformation with Amazon RDS, simplifying the setup, operation, and scaling of relational databases. Be it MySQL, PostgreSQL, or SQL Server, RDS provides a frictionless environment for managing diverse database workloads. For enthusiasts of NoSQL, Amazon DynamoDB steps in as a swift and flexible solution for document and key-value data storage.

Networking Mastery: Amazon VPC and Route 53

AWS empowers users to construct a virtual sanctuary for their resources through Amazon VPC (Virtual Private Cloud). This virtual network facilitates the launch of AWS resources within a user-defined space, enhancing security and control. Simultaneously, Amazon Route 53, a scalable DNS web service, ensures seamless routing of end-user requests to globally distributed endpoints.

Global Content Delivery Excellence with Amazon CloudFront

Amazon CloudFront emerges as a dynamic content delivery network (CDN) service, securely delivering data, videos, applications, and APIs on a global scale. This ensures low latency and high transfer speeds, elevating user experiences across diverse geographical locations.

AI and ML Prowess Unleashed

AWS propels businesses into the future with advanced machine learning and artificial intelligence services. Amazon SageMaker, a fully managed service, enables developers to rapidly build, train, and deploy machine learning models. Additionally, Amazon Rekognition provides sophisticated image and video analysis, supporting applications in facial recognition, object detection, and content moderation.

Big Data Mastery: Amazon Redshift and Athena

For organizations grappling with massive datasets, AWS offers Amazon Redshift, a fully managed data warehouse service. It facilitates the execution of complex queries on large datasets, empowering informed decision-making. Simultaneously, Amazon Athena allows users to analyze data in Amazon S3 using standard SQL queries, unlocking invaluable insights.

In conclusion, Amazon Web Services (AWS) stands as an all-encompassing cloud computing platform, empowering businesses to innovate, scale, and optimize operations. From adaptable compute power and secure storage solutions to cutting-edge AI and ML capabilities, AWS serves as a robust foundation for organizations navigating the digital frontier. Embrace the limitless potential of cloud computing with AWS – where innovation knows no bounds.

3 notes
